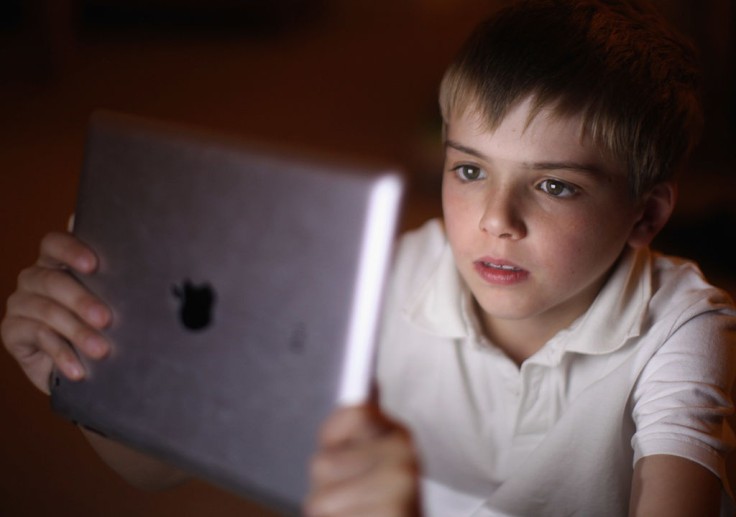

Apple, the tech giant and iPhone maker, is planning to release a feature in the U.S. that will help crack down on people who store child sexual abuse material (CSAM) on their devices.

According to the official announcement, Apple has designed an artificial intelligence (AI) system called "NeuralHash" that will work to identify whether a user has stored such material on Apple devices like the iPhone. The tech company said that NeuralHash will be able to detect these images on users' devices and flag suspected CSAM through a series of checks before an image is cleared for upload to Apple's iCloud servers.

If the AI still detects questionable content, a human reviewer will make another assessment and decide whether the account should be blocked and reported to the police. The company said that its AI can also identify images that have been cropped or edited, using a comparison database from the U.S. National Center for Missing and Exploited Children (NCMEC) and several other child safety organizations around the world.
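To illustrate the general idea of matching edited images against a database, here is a minimal sketch of a toy perceptual hash in Python. It is not NeuralHash and does not reflect Apple's actual implementation: the `average_hash` function, the tiny 2x2 "images," and the `max_distance` threshold are all assumptions made for illustration only.

```python
from typing import Iterable, List

Image = List[List[int]]  # hypothetical grayscale image: rows of pixel values, 0-255


def average_hash(pixels: Image) -> int:
    """Toy perceptual hash (an assumption, not NeuralHash):
    one bit per pixel, set when the pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known(pixels: Image, known_hashes: Iterable[int], max_distance: int = 1) -> bool:
    """True when the image's hash is within max_distance bits of any known hash."""
    h = average_hash(pixels)
    return any(hamming(h, k) <= max_distance for k in known_hashes)


# Illustrative data only: an "original", a brightened copy, and an unrelated image.
original = [[10, 200], [220, 30]]
brightened = [[30, 220], [240, 50]]
unrelated = [[200, 10], [30, 220]]

known = {average_hash(original)}

print(matches_known(brightened, known))  # the edited copy still matches
print(matches_known(unrelated, known))   # the unrelated image does not
```

Because the hash depends on each pixel's brightness relative to the image's own average, a uniformly brightened copy produces the same bits as the original, which is the kind of robustness to edits the announcement describes. A real system would be far more sophisticated, and a match would still go to human review.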

Privacy Worries, Spying on Users

Word of the new feature was first revealed by Matthew Green, a cryptography expert at Johns Hopkins University. The professor said on Twitter that law enforcement agencies across the world have been asking for this tool to help curb the incidence of child pornography.

However, the new feature has sparked worries that it could be abused by authorities to spy on Apple users. According to Green, now that the company has shown that it can build a system that scans content, more governments will "demand it from everyone."

Greg Nojeim of the Center for Democracy & Technology's Security & Surveillance Project agreed that the move will leave digital infrastructure open to abuse and erode users' faith in Apple's data security.

For years, Apple has resisted government pressure to come up with a system that can help law enforcement, arguing that it would become a security and privacy problem for all of its users. Security experts have applauded its stance, but with these new changes, Green said that the tech giant is closer to becoming a surveillance tool.

Other Safety Features to Protect Kids

The company also revealed that it will add other safety features to protect children from online harm, such as blocking explicit photos that a child receives on iMessage and an intervention system that will prevent a user from accessing CSAM-related content through Siri or Search.

For instance, if a child receives an explicit photo on iMessage, the photo will be immediately blurred and the child will get a warning, along with links to helpful resources for their awareness and education. If they view the photo anyway or send it to their contacts, their parents will receive a notification.

Siri, meanwhile, will be updated to inform users about the "harmful and problematic" content they are trying to search for.

NeuralHash will come with the iOS 15 update for mobile devices and the macOS Monterey update for computers. It has a target release date within the next two to three months.